https://github.com/GandhiGames/unity_game_foundations

What is it?

The audio queue is an implementation of an event queue. An event queue holds events, messages, or any other objects that require processing. Using this technique, you decouple when an event is sent from when it is processed.

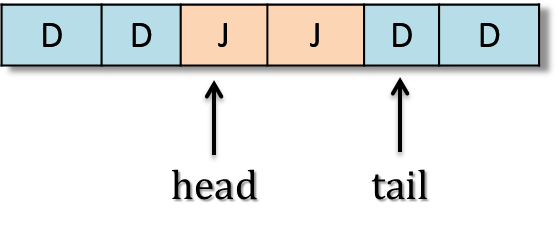

The events are stored in a FIFO order using a circular buffer.

When the write position reaches the end of the buffer, it wraps around to the beginning and new data overwrites the old. Two indices are maintained: the head (where data is added) and the tail (where data is retrieved). This avoids dynamic allocation, and it avoids the element shifting that occurs when you remove items from the front of a built-in list.
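To make the head and tail bookkeeping concrete, here is a minimal sketch of a fixed-size circular buffer in plain C#. The class name `RingBuffer<T>` and its members are hypothetical and are not taken from the repository. It assumes that a full buffer drops its oldest unread item, which is one reading of the description above; the actual project may handle a full buffer differently.

```csharp
using System;

// Hypothetical fixed-capacity ring buffer; not the repository's actual class.
public class RingBuffer<T>
{
    private readonly T[] items;
    private int head;   // index where the next item is written
    private int tail;   // index where the next item is read
    private int count;  // number of unread items

    public RingBuffer(int capacity)
    {
        if (capacity <= 0) throw new ArgumentOutOfRangeException(nameof(capacity));
        items = new T[capacity]; // allocated once, never resized
    }

    public int Count => count;

    // Writes at the head. Assumption: when full, the oldest unread item is overwritten.
    public void Enqueue(T item)
    {
        if (count == items.Length)
        {
            // Full: give up the oldest item by advancing the tail.
            tail = (tail + 1) % items.Length;
            count--;
        }
        items[head] = item;
        head = (head + 1) % items.Length; // wrap around at the end of the array
        count++;
    }

    // Reads from the tail. Returns false when there is nothing to read.
    public bool TryDequeue(out T item)
    {
        if (count == 0)
        {
            item = default(T);
            return false;
        }
        item = items[tail];
        items[tail] = default(T); // release the reference held by the slot
        tail = (tail + 1) % items.Length;
        count--;
        return true;
    }
}
```

Both operations only move an index, so nothing is shifted or reallocated no matter how many events pass through the buffer.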

Audio clips are added to the queue using an event system (see this post for more information on the event system). However, you can add audio to the queue in any manner you see fit.

Why should you use it?

Decoupling! In our audio example, systems (such as enemy AI, the environment, etc.) can simply add an audio clip to the queue. They do not need to know how to play clips. The sender and receiver are decoupled.

The systems adding audio clips to the queue are not blocked while the program attempts to play the audio clip. Each system adds its clip to the queue and then continues on its merry way, knowing that it is no longer responsible for the clip.

By implementing an audio queue, you can prevent the same audio clip being played several times in the same frame. For example, in a shooter, if you’re surrounded by ten enemies and they all fire at you at the same time, then ten requests to play the same clip are initiated. Without some way to handle this, the clips’ waveforms are added together and the shot plays far louder than you intended (up to ten times the amplitude). Ouch! The audio queue can check for pending clips of the same type and play only one.
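The duplicate check can be sketched roughly as follows. This is an illustration only, not the repository's code: the class `DedupingAudioQueue` is hypothetical, clips are identified by a plain string name instead of Unity's `AudioClip`, and playback is delegated to a callback so the example stays free of engine dependencies.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical sketch: clips are identified by name rather than by Unity's AudioClip.
public class DedupingAudioQueue
{
    private readonly Queue<string> pending = new Queue<string>();
    private readonly HashSet<string> pendingNames = new HashSet<string>();
    private readonly Action<string> playClip;

    public DedupingAudioQueue(Action<string> playClip)
    {
        this.playClip = playClip ?? throw new ArgumentNullException(nameof(playClip));
    }

    // Called by senders. Returns immediately; nothing is played here.
    public bool Request(string clipName)
    {
        // HashSet.Add returns false if this clip is already waiting to be played.
        if (!pendingNames.Add(clipName)) return false;
        pending.Enqueue(clipName);
        return true;
    }

    // Called once per frame by the owner of the queue (for example from Update).
    public void ProcessPending()
    {
        while (pending.Count > 0)
        {
            playClip(pending.Dequeue());
        }
        pendingNames.Clear(); // the next frame starts with a clean slate
    }
}
```

With this in place, ten `Request("gunshot")` calls in one frame result in a single call to the playback callback when `ProcessPending` runs.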

You can easily manage the resources of your audio engine by limiting the number of clips that can be added to the queue. This is useful on mobile and other resource-constrained platforms. You can also run the audio system on a separate thread, which may improve performance further.
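One simple way to enforce such a limit is to reject requests once the queue holds a fixed number of clips. The sketch below assumes that policy; `CappedAudioQueue`, `TryRequest`, and `TryGetNext` are hypothetical names, and clips are again represented by string names rather than Unity types.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical sketch of a capped request queue; names and policy are illustrative.
public class CappedAudioQueue
{
    private readonly Queue<string> pending = new Queue<string>();
    private readonly int maxPending;

    public CappedAudioQueue(int maxPending)
    {
        if (maxPending <= 0) throw new ArgumentOutOfRangeException(nameof(maxPending));
        this.maxPending = maxPending;
    }

    public int Count => pending.Count;

    // Rejects the request instead of letting the queue grow past the limit.
    public bool TryRequest(string clipName)
    {
        if (pending.Count >= maxPending) return false;
        pending.Enqueue(clipName);
        return true;
    }

    // Hands the oldest pending clip to the caller, if there is one.
    public bool TryGetNext(out string clipName)
    {
        if (pending.Count == 0)
        {
            clipName = null;
            return false;
        }
        clipName = pending.Dequeue();
        return true;
    }
}
```

Senders can ignore the return value of `TryRequest` if a dropped sound is acceptable, or use it to fall back to a cheaper effect.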

Implementation

The full code listing for the audio queue is reproduced below. It can also be found on GitHub (link included at the top of the page).

How do you use it?

Although this article is not about the event system that the audio queue uses, I thought it could prove useful to include a quick example of how events work in relation to the queue.

All audio events inherit from the same interface.

Example of a concrete audio class.

Separate events are created for 2D and 3D data. The 3D audio clips also include spatial data in the form of a vector representing where the clip originated.

Once created, the events can be raised by any class as shown below (as long as the event scripts have been imported from the above GitHub project).
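For readers without the project to hand, the overall shape might look something like the sketch below. Every name in it (`IAudioEvent`, `Audio2DEvent`, `Audio3DEvent`, `AudioEventBus`) is a hypothetical stand-in and not the API of the linked repository. Clips are identified by name, and `System.Numerics.Vector3` stands in for Unity's `Vector3` so the example compiles outside the engine.

```csharp
using System;
using System.Numerics;

// All names here are hypothetical stand-ins, not the types in the linked repository.
public interface IAudioEvent
{
    string ClipName { get; }
}

// A 2D clip carries no spatial data.
public class Audio2DEvent : IAudioEvent
{
    public string ClipName { get; }

    public Audio2DEvent(string clipName)
    {
        ClipName = clipName;
    }
}

// A 3D clip also records where the sound originated.
public class Audio3DEvent : IAudioEvent
{
    public string ClipName { get; }
    public Vector3 Position { get; } // stand-in for UnityEngine.Vector3

    public Audio3DEvent(string clipName, Vector3 position)
    {
        ClipName = clipName;
        Position = position;
    }
}

// Minimal event channel: senders call Raise, the audio queue subscribes to Raised.
public class AudioEventBus
{
    public event Action<IAudioEvent> Raised;

    public void Raise(IAudioEvent audioEvent)
    {
        // The null-conditional call does nothing when there are no subscribers.
        Raised?.Invoke(audioEvent);
    }
}
```

Under these assumptions, a sender would call something like `bus.Raise(new Audio3DEvent("gunshot", new Vector3(1f, 0f, 2f)))`, and the audio queue would subscribe to `Raised` and enqueue whatever arrives.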

And that’s it! The audio clip will be added to the audio queue. As always, thank you for reading 🙂