Genetic Algorithms

https://github.com/thatgamesguy/that_genetic_algorithm

0 forks.

1 stars.

0 open issues.

Recent commits:

- new np, Robert Wells

- refractored, Robert Wells

- refractored, Robert Wells

- refractored, Robert Wells

- good results with 100+ epochs, Robert Wells

As biological neurons provide inspiration for their artificial counterpart, biological evolution has inspired the creation of genetic algorithms. Evolutionary computing provides an alternative to traditional search techniques and can find a solution to a problem “by selecting from a number of potential solutions…realising new solutions not previously considered”.

In biological evolution, a population of individuals in a species represents a generation; members of this population mate to produce a new generation with characteristics of both of its parents. This process allows the species to survive and adapt to changes in the environment, albeit very slowly.

Evolutionary computing is based on this process. By using this technique, it is possible to create software that adapts itself overtime to find increasingly more suitable solutions to a problem. The potential solutions represent the population of individuals. The solutions are crossed over to produce new solutions, which are then tested. With each new generation generally representing an improved solution.

Genetic Algorithms (GA’s) are a specific type of evolutionary computing paradigm common to artificial life simulations. Solutions to problems are encoded in a structure called a chromosome (or genome). The solution can be represented as binary digits (bits) or in any other computer readable format (e.g. floating-point weights of a Neural Network). Similarly, to biological evolution, new generations are created by mating two chromosomes and (possibly) applying mutation to produce a new offspring and consequently a new solution.

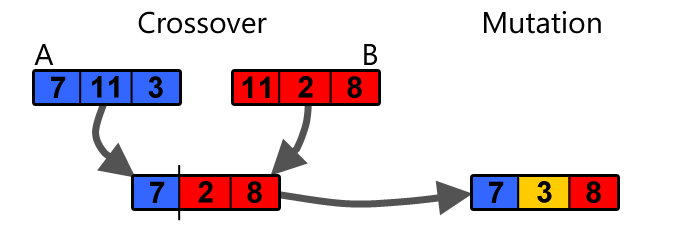

Chromosomes A and B are mated to produce an offspring. The crossover point can be dynamically selected or pre-determined; in the diagram the first block of A is crossed over with the second and third block of B. This produces a new offspring with characteristics of both parents. Mutation is not usually performed after every crossover; rather it is performed based on a mutation rate. During mutation, one or more digits is selected and changed in some manner e.g. if the chromosome is encoded in binary digits the digits are reversed.

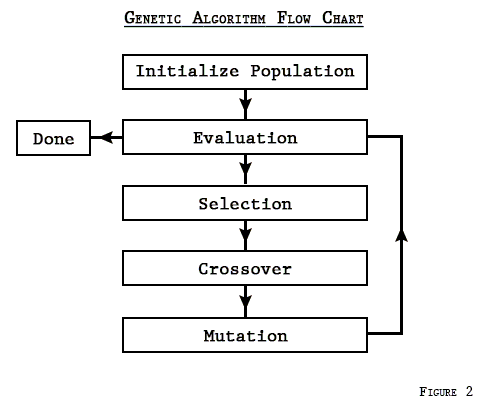

A general process for a GA is shown in the diagram below.

There are a number of methods used to select individuals for crossover and mutation; however, fitness proportionate selection is commonly used in artificial life simulations due to its parallels with nature. Using this method, the fitter an individual (based on their fitness) the higher the probability of selection. However, it does not guarantee that the fittest individual will be selected.

The problem being solved by a GA is defined by its fitness function. Individuals are evolved to have the best fitness values possible. The fitness function is used to evaluate the relative performance of possible solutions and is normally defined as a numerical, problem-independent, value. There is no general function; it must be tailored for each application.

Implementation

A part of the main genetic algorithm loop is included below. This loop is responsible for evolving the ufos in the demo scene to (hopefully) learn that they survive longer if they don’t crash into each other. I’ll go through the complete project in a future article but for now you can download it from the GitHub link at the top of the page.

bool GeneticAlgorithm::PoolSort(std::shared_ptr<C_GeneticAgent> a, std::shared_ptr<C_GeneticAgent> b)

{

return a->GetTimeAlive() > b->GetTimeAlive();

}

void GeneticAlgorithm::Update(float deltaTime)

{

std::vector<std::shared_ptr<Object>>& objects = agents.GetObjects();

for(auto& o : objects)

{

auto geneticAgent = o->GetComponent<C_GeneticAgent>();

if(geneticAgent != nullptr && geneticAgent->GetEnergy() <= 0)

{

AddToPool(geneticAgent);

CalculateTotalFitness();

if(pool.size() > 1)

{

int parentOne = FitnessProportionateSelection();

int parentTwo = FitnessProportionateSelection();

std::shared_ptr<C_GeneticAgent> agent = CreateAgentFromCrossOver(pool[parentOne], pool[parentTwo]);

float mutation = static_cast <float> (rand()) /( static_cast <float> (RAND_MAX));

if (mutation < mutationChance)

{

NeuralNetwork network = agent->GetNeuralNetwork();

network.MutateExchange();

agent->SetNeuralNetwork(network);

}

if(++addedToSimulation % maxPoolSize == 0)

{

LogToFile();

addedToSimulation = 0;

}

}

o->QueueForRemoval();

}

}

}

void GeneticAlgorithm::AddToPool(std::shared_ptr<C_GeneticAgent> agent)

{

pool.push_back(agent);

std::sort(pool.begin(), pool.end(), PoolSort);

if(pool.size() > maxPoolSize)

{

pool.erase(pool.end() - 1);

}

}

void GeneticAlgorithm::CalculateTotalFitness()

{

totalFitnessScore = 0;

for (int i = 0; i < pool.size(); i++)

{

totalFitnessScore += pool[i]->GetTimeAlive();

}

}

int GeneticAlgorithm::FitnessProportionateSelection(int exclusion)

{

float randomSlice = static_cast <float>(rand()) / (static_cast <float>(RAND_MAX / totalFitnessScore));

std::shared_ptr<C_GeneticAgent> choosenAgent = nullptr;

float fitnessTotal = 0;

for (int i = 0; i < pool.size(); i++)

{

if(i == exclusion)

{

continue;

}

fitnessTotal += pool[i]->GetTimeAlive();

if (fitnessTotal >= randomSlice)

{

return i;

}

}

return -1;

}

std::shared_ptr<C_GeneticAgent> GeneticAlgorithm::CreateAgentFromCrossOver(const std::shared_ptr<C_GeneticAgent> parentOne, const std::shared_ptr<C_GeneticAgent> parentTwo)

{

NeuralNetwork neuralNetwork = parentOne->GetNeuralNetwork();

if(parentOne != parentTwo)

{

neuralNetwork = CreateNeuralNetworkFromCrossOver(parentOne->GetNeuralNetwork(), parentTwo->GetNeuralNetwork());

}

auto agent = AddAgentToSimulation();

agent->SetNeuralNetwork(neuralNetwork);

return agent;

}

NeuralNetwork GeneticAlgorithm::CreateNeuralNetworkFromCrossOver(const NeuralNetwork& networkOne, const NeuralNetwork& networkTwo)

{

std::vector<float> parentOneWeights = networkOne.GetWeights();

std::vector<float> parentTwoWeights = networkTwo.GetWeights();

std::vector<int> crossoverPoints = networkOne.GetSplitPoints();

int crossOverIndex = (rand() % static_cast<int>(crossoverPoints.size()));

int crossOverPoint = crossoverPoints[crossOverIndex];

std::vector<float> newWeights;

for (int i = 0; i < crossOverPoint; i++)

{

newWeights.push_back(parentOneWeights[i]);

}

for (int i = crossOverPoint; i < parentOneWeights.size(); i++)

{

newWeights.push_back(parentTwoWeights[i]);

}

int numOfInput = networkOne.numOfInput;

int numOfHiddenLayers = networkOne.numOfHiddenLayers;

int numOfNeuronsInHiddenLayer = networkOne.numOfNeuronsInHiddenLayers;

int numOfOutput = networkOne.numOfOutput;

NeuralNetwork neuralNet(numOfInput, numOfHiddenLayers, numOfNeuronsInHiddenLayer, numOfOutput);

neuralNet.SetWeights(newWeights);

return neuralNet;

}This was just a brief introduction to genetic algorithms and my project. Shortly I will be writing a more in-depth tutorial for the project, that will go step-by-step through how it was created and how we will be extending it in future.

As always, thank you for reading